Nvidia Hopper Leaps Ahead

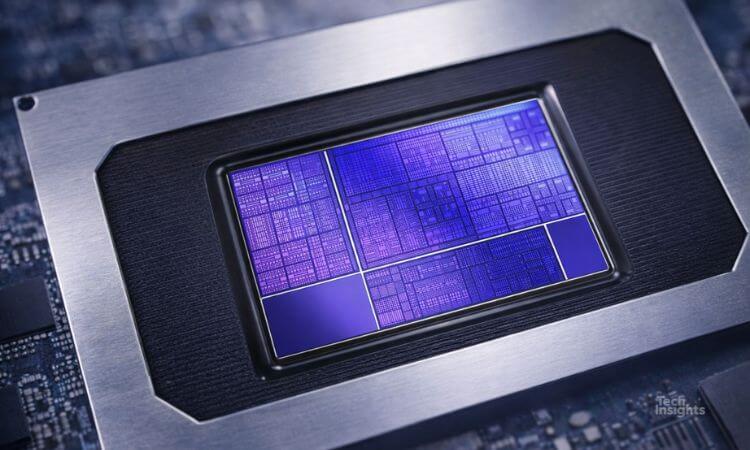

New 700W AI Chip Triples Performance, Adds FP8 Support

The next-generation AI architecture powers the H100 accelerator and the DGX-H100 system. The 700W flagship version triples peak performance over Ampere while adding FP8 support for more-efficient training.

Linley Gwennap

For two years, other AI-accelerator vendors have tried and failed to surpass Nvidia’s Ampere A100. Now the bar is suddenly much higher: the market leader has introduced its newest AI processor, the Hopper H100. In its maximum configuration, the chip triples raw performance over the A100 while pushing power consumption to a scorching 700W. New features such as 8-bit floating-point (FP8) support and improved scalability promise even greater gains for training large networks.
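To make "8-bit floating point" concrete, the sketch below decodes one FP8 byte. It assumes the E4M3 layout (1 sign bit, 4 exponent bits with a bias of 7, 3 mantissa bits, no infinities, largest finite value 448), one of the two FP8 encodings Hopper supports alongside E5M2; the article itself does not describe the bit layouts, and the function name `decode_e4m3` is hypothetical.

```python
import math

def decode_e4m3(byte: int) -> float:
    """Decode one FP8 E4M3 byte (assumed layout: 1 sign, 4 exponent, 3 mantissa bits, bias 7)."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value in 0..255")
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F
    mantissa = byte & 0x07
    # E4M3 has no infinities; only the all-ones exponent and mantissa pattern is NaN.
    if exponent == 0x0F and mantissa == 0x07:
        return math.nan
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent of (1 - bias).
        return sign * (mantissa / 8) * 2.0 ** (1 - 7)
    # Normal: implicit leading 1, exponent biased by 7.
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)

for b in (0x38, 0x7E, 0x01, 0xB8):
    print(hex(b), decode_e4m3(b))
```

With only 3 mantissa bits, E4M3 trades precision for half the storage and bandwidth of FP16, which is what lets the hardware process twice as many operands per clock.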

The Hopper architecture doubles the number of MAC units per core, boosting performance at only a modest power cost. Built in 4nm technology, the GPU packs more cores than the A100 and runs them at a higher clock speed. The new FP8 format doubles throughput compared with standard FP16 training.
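The three factors above (twice the MACs per core, more cores, a faster clock) multiply together. The sketch below shows that arithmetic. The per-chip figures are assumptions taken from Nvidia's public specifications, not from this article: 108 SMs at 1.41GHz with 1,024 FP16 tensor FMAs per SM per clock for the A100, and 132 SMs at roughly 1.83GHz for the H100 SXM5. Treat the output as illustrative.

```python
def peak_tflops(sms: int, fma_per_sm_per_clk: int, clock_ghz: float) -> float:
    """Peak dense throughput in TFLOPS; each fused multiply-add counts as 2 FLOPs."""
    return sms * fma_per_sm_per_clk * 2 * clock_ghz * 1e9 / 1e12

# ASSUMED figures (not from this article): A100 with 108 SMs at 1.41 GHz and
# 1,024 FP16 tensor FMAs per SM per clock; H100 SXM5 with 132 SMs at ~1.83 GHz.
a100_fp16 = peak_tflops(108, 1024, 1.41)
# "Doubles the number of MAC units per core" -> 2,048 FP16 FMAs per SM per clock.
h100_fp16 = peak_tflops(132, 2 * 1024, 1.83)
# FP8 doubles FMAs per clock again relative to FP16.
h100_fp8 = peak_tflops(132, 2 * 2 * 1024, 1.83)

print(f"A100 FP16: {a100_fp16:.0f} TFLOPS")
print(f"H100 FP16: {h100_fp16:.0f} TFLOPS ({h100_fp16 / a100_fp16:.1f}x A100)")
print(f"H100 FP8:  {h100_fp8:.0f} TFLOPS")
```

Under these assumptions the gain decomposes as 2 (MACs) × 132/108 (cores) × 1.83/1.41 (clock), or about 3.2x, in line with the "triples" claim.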

The company offers the H100 in a custom SXM5 module or a standard PCIe card; the latter delivers about one-third less performance at 350W. Nvidia has also developed a new DGX-H100 system that combines eight Hopper chips. It employs next-generation NVLink technology to create high-bandwidth connections between systems, greatly increasing performance in clusters of up to 32 DGX systems. The company expects production shipments of H100-based modules, cards, and systems by September.
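A quick calculation from the figures in the paragraph above shows what the PCIe trade-off means for efficiency and how many GPUs the largest NVLink cluster spans. It treats "about one-third less performance" as exactly two-thirds of the SXM5 module's performance, which is an approximation.

```python
from fractions import Fraction

# Figures from the text: the SXM5 module runs at 700W; the PCIe card delivers
# about one-third less performance at 350W.
SXM5_POWER_W = 700
PCIE_POWER_W = 350
pcie_relative_perf = Fraction(2, 3)  # approximation of "about one-third less"

# Performance per watt of the PCIe card relative to the SXM5 module.
pcie_perf_per_watt = pcie_relative_perf / Fraction(PCIE_POWER_W, SXM5_POWER_W)

# Largest NVLink cluster mentioned: 32 DGX-H100 systems of eight GPUs each.
GPUS_PER_DGX = 8
MAX_DGX_SYSTEMS = 32
max_cluster_gpus = GPUS_PER_DGX * MAX_DGX_SYSTEMS

print(f"PCIe perf/W vs SXM5: {float(pcie_perf_per_watt):.2f}x")
print(f"GPUs in a 32-system cluster: {max_cluster_gpus}")
```

Halving power while losing only a third of the performance leaves the PCIe card roughly 1.33x more efficient per watt, at the cost of lower absolute throughput.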

At the recent GTC, Nvidia also gave a brief update on its Grace processor, which is due to ship in 1H23. Grace will contain 72 Arm Neoverse CPU cores and 198MB of on-chip cache, and it will connect to eight LPDDR5X channels. The processor will typically sit side by side with a Hopper GPU, forming what Nvidia calls the “Grace Hopper superchip” (after the celebrated admiral and computer scientist). In this configuration, the processor can feed data from 512GB of DRAM directly to the GPU through its coherent NVLink connections, accelerating performance on enormous models such as GPT.
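Why 512GB of CPU-attached DRAM matters for models like GPT is easiest to see from the weight footprint alone. The sketch below uses the 512GB figure from the text; the 80GB of GPU-local memory and the 175-billion-parameter model size (GPT-3's published size) are assumptions for illustration, and the estimate ignores activations and optimizer state, which add substantially more during training.

```python
def weights_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed for model weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9

GRACE_DRAM_GB = 512   # from the text
GPU_LOCAL_GB = 80     # ASSUMED GPU-local memory; not stated in this article
params = 175e9        # illustrative GPT-3-sized model (175 billion parameters)

for fmt, nbytes in (("FP16", 2), ("FP8", 1)):
    need = weights_gb(params, nbytes)
    print(f"{fmt}: {need:.0f} GB of weights; fits GPU-local: {need <= GPU_LOCAL_GB}; "
          f"fits Grace DRAM: {need <= GRACE_DRAM_GB}")
```

Even at FP8, such a model's weights exceed the assumed GPU-local capacity but fit comfortably in Grace's DRAM, which is the gap the coherent CPU-GPU link is meant to bridge.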


Subscribers can view the full article in the TechInsights Platform.

You must be a subscriber to access the Microprocessor Report