The AI Carbon Challenge: Why Your Next GPU's Memory Stack Is the New Emissions Hotspot

AI GPU production will account for 8.7% of all semiconductor sector emissions by 2030. The Global AI GPU Manufacture Carbon Emissions Forecast, 2026-2030, contains the critical data and strategic insights needed to navigate this accelerating environmental transition.

5 Min Read December 09, 2025

Artificial Intelligence is the defining technological force of this decade. Its astonishing capabilities are driving an unprecedented surge in demand for specialized hardware, primarily high-end GPUs and accelerators. Yet, beneath the headlines of performance records and market cap gains lies a hidden, accelerating environmental cost: the carbon footprint of manufacturing the hardware itself. While the embodied carbon from manufacturing GPUs is not on the scale of the emissions from AI's power consumption, it is far from insignificant.

At TechInsights, we've quantified this cost. Our inaugural Global AI GPU Manufacture Carbon Emissions Forecast, 2025-2030, revealed concerning figures last year, and our latest update shows that the scale of the challenge has grown significantly.

Emissions Trajectory: Twelvefold Growth

The numbers are stark. Manufacturing emissions from AI GPU production are projected to rise over twelvefold, climbing from 1.8 million metric tons of CO2 in 2024 to 21.6 million metric tons by 2030. That's a staggering 64.5% annual growth rate. To put that in perspective, this single, high-growth product category is forecast to account for 8.7% of all semiconductor sector emissions by the end of the decade.
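The headline figures can be sanity-checked with a few lines of arithmetic. The sketch below uses only the two endpoint values quoted above. The function names and the choice of how many compounding periods to count are illustrative assumptions, not the report's methodology.

```python
# Endpoint values quoted in the article (million metric tons of CO2).
START_MT = 1.8   # 2024
END_MT = 21.6    # 2030 (projected)


def growth_multiple(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def implied_annual_growth(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate implied by two endpoints.

    `periods` is the number of compounding steps between them; which
    count the report uses is an assumption here.
    """
    return (end / start) ** (1 / periods) - 1


multiple = growth_multiple(START_MT, END_MT)
print(f"Growth multiple: {multiple:.1f}x")

# Compare two plausible period counts for the same endpoints.
for periods in (5, 6):
    rate = implied_annual_growth(START_MT, END_MT, periods)
    print(f"{periods} compounding periods: {rate:.1%} per year")
```

With these rounded endpoints, five compounding periods give roughly 64% per year, close to the quoted rate, while six periods give roughly 51%. Which convention the report uses is not stated here, so treat the comparison as a consistency check rather than a reconstruction.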

The sheer silicon intensity required for each new accelerator is driving this growth. The average AI GPU unit's embodied carbon is expected to exceed 1 metric ton (MT) of CO2e by 2029, nearly seven times the footprint of an H100 Hopper GPU.

The Great Carbon Shift: Memory is the New Driver

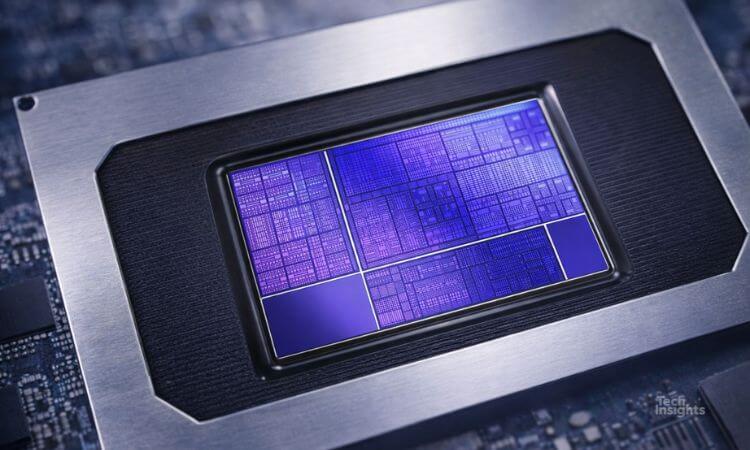

Why such an exponential acceleration? For years, the primary source of embodied carbon (the greenhouse gas emissions associated with producing a product, as distinct from those generated by operating it) in a high-end chip was the massive GPU die itself. While chips like the NVIDIA H100 Hopper already approach the physical limits of lithography, the next generation of emissions growth isn't coming primarily from GPU die size. It's coming from memory.

AI accelerators are now complex, multi-die systems that rely on deep integration of High-Bandwidth Memory (HBM). The demand for HBM is causing an environmental multiplier effect. We project that the average accelerator will integrate roughly 250 HBM dies by 2030, representing a sixfold increase over current generations. This explosion in memory stacks means that HBM is rapidly becoming the dominant source of embodied carbon in advanced AI hardware, often outpacing the core compute die.

Compounding Complexity and Yield Loss

The complexity of these designs (multi-die packages, silicon interposers for packaging, and growing HBM counts) demands more materials and more energy-intensive fabrication steps.

Furthermore, yield losses, an inevitable challenge at the leading edge of manufacturing, act as a cruel carbon multiplier. Each failed GPU assembly represents wasted silicon, chemicals, and energy, increasing the effective carbon cost of every functional chip that makes it to market.
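The multiplier effect of yield loss can be illustrated with a simple model. This is a minimal sketch that assumes every failed assembly incurs the full manufacturing footprint and nothing is recovered. The function name and the 100 kg input are hypothetical placeholders, not figures from the forecast.

```python
def effective_carbon_per_good_unit(carbon_per_attempt_kg: float,
                                   yield_fraction: float) -> float:
    """Carbon attributed to each working unit once scrap is accounted for.

    Simplifying assumption: failed units consume the same materials and
    energy as good ones, so the total footprint is spread over only the
    fraction of units that work.
    """
    if not 0 < yield_fraction <= 1:
        raise ValueError("yield_fraction must be in the range (0, 1]")
    return carbon_per_attempt_kg / yield_fraction


# Hypothetical per-attempt footprint, for illustration only.
CARBON_PER_ATTEMPT_KG = 100.0

for y in (1.0, 0.8, 0.5):
    effective = effective_carbon_per_good_unit(CARBON_PER_ATTEMPT_KG, y)
    print(f"yield {y:.0%}: {effective:.0f} kg CO2e per good unit")
```

Under this model, halving the yield doubles the carbon attributed to every chip that ships, which is why yield matters more as packages grow more complex.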

The AI hardware race is now a resource race. How will the industry manage the escalating embodied carbon cost of memory? Will a shift towards ASIC production exacerbate the problem? The full Global AI GPU Manufacture Carbon Emissions Forecast, 2026-2030 contains the critical data and strategic insights needed to navigate this accelerating environmental transition.

