The High-Stakes Race for Sustainable AI: Is HBM Stretching the Planet’s Limits?

HBM is becoming the backbone of AI, but its carbon and supply chain footprint is growing rapidly. Explore how surging demand for stacked memory is reshaping DRAM investment, straining wafer capacity, and multiplying emissions through complex packaging, yield loss, and duplicated fabs on carbon-intensive grids.

January 08, 2026

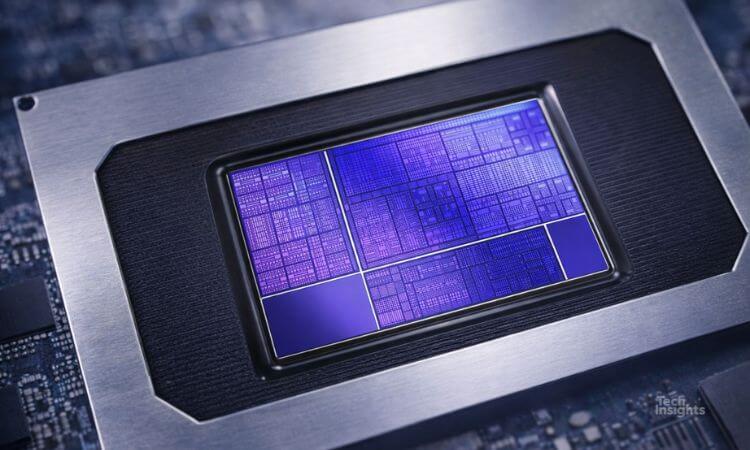

High-bandwidth memory (HBM) has quietly become the lifeblood of the AI revolution. As models grow more complex, the demand for memory that is faster, denser, and more efficient has shifted HBM from a niche technology to the new center of gravity for the DRAM market.

But as we push for more "intelligence," a critical question remains: can the world sustain the supply chain and environmental costs of producing it? Our latest analysis of sustainable technology in the memory sector suggests we are entering an unprecedented "demand shock" that carries a significant carbon price tag.

A $100 Billion Market with a Massive Footprint

The growth of HBM is no longer just a trend; it is an explosion. TechInsights projects the global HBM market will skyrocket from roughly US$16 billion in 2024 to nearly US$100 billion by 2030.
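To put that projection in annual terms, the two endpoints can be converted into an implied compound annual growth rate. The snippet below is a minimal sketch of that arithmetic; it uses the rounded figures quoted above ("roughly" $16 billion and "nearly" $100 billion), so the result is indicative only, and the function name `cagr` is ours rather than anything from the underlying report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded endpoints from the text, in billions of US dollars.
market_2024 = 16.0
market_2030 = 100.0

implied_rate = cagr(market_2024, market_2030, 2030 - 2024)
print(f"Implied CAGR 2024-2030: {implied_rate:.1%}")
```

With these rounded inputs the implied growth rate comes out at roughly 36% per year, sustained over six years.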

This demand is so intense that capacity is largely sold out into 2026. To keep pace, manufacturers like SK hynix, Samsung, and Micron are pivoting their capital expenditure aggressively. We forecast that HBM-dedicated capex will reach $27 billion by 2030, accounting for almost half of all DRAM capital spending.

However, building these advanced fabs creates a paradox. While HBM enables more energy-efficient AI operations, the semiconductor emissions generated during its fabrication are immense. HBM is both power-hungry to manufacture and capital-intensive to site, making the carbon intensity of the power grid where these fabs are located a primary concern for the industry's lifecycle footprint.

Geography, Resilience, and the Efficiency Gap

The push for supply chain resilience is leading to an expansion of HBM capabilities across the globe. While diversification into North America and Europe offers the chance to tap into cleaner electricity mixes, other regions are ramping up production using less mature processes and more carbon-intensive electricity.

In China, domestic players are hedging against export controls by accelerating HBM production. The challenge? These lines often rely on older tools and less optimized processes, leading to lower yields and higher emissions per usable stack. This "resilience vs. sustainability" clash risks inflating the global carbon footprint if efficiency doesn't scale as fast as capacity.
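The interaction between yield and grid carbon intensity can be illustrated with a deliberately simple model: electricity-related emissions per stack started, divided by the fraction of stacks that turn out usable. The sketch below is an illustration under stated assumptions, not our lifecycle methodology. Every input number is hypothetical and chosen only to show the mechanics, and the model ignores process gases, materials, and other non-electricity sources.

```python
def emissions_per_usable_stack(
    energy_kwh_per_stack: float,
    grid_kgco2_per_kwh: float,
    yield_fraction: float,
) -> float:
    """Electricity-related kg CO2e attributed to each usable HBM stack.

    Energy is spent on every stack started, but only the yielded
    fraction is usable, so the per-usable-stack figure scales with
    1 / yield.
    """
    if not 0 < yield_fraction <= 1:
        raise ValueError("yield_fraction must be in (0, 1]")
    return energy_kwh_per_stack * grid_kgco2_per_kwh / yield_fraction


# Hypothetical inputs for illustration only (not measured data).
mature_line = emissions_per_usable_stack(
    energy_kwh_per_stack=100.0, grid_kgco2_per_kwh=0.2, yield_fraction=0.8
)
newer_line = emissions_per_usable_stack(
    energy_kwh_per_stack=100.0, grid_kgco2_per_kwh=0.6, yield_fraction=0.5
)

print(f"Mature line: {mature_line:.0f} kg CO2e per usable stack")
print(f"Newer line:  {newer_line:.0f} kg CO2e per usable stack")
print(f"Ratio:       {newer_line / mature_line:.1f}x")
```

In this toy comparison the two effects multiply: a grid three times as carbon-intensive combined with a yield of 50% instead of 80% gives nearly five times the emissions per usable stack, even though the energy spent per stack started is identical.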

Towards "Sustainable Intelligence"

None of this means that AI growth is incompatible with climate goals. It does, however, mean that sustainability must become a core design constraint. We believe the path forward involves three practical shifts:

The race for AI performance is well underway. The next race is for sustainable performance: growing memory bandwidth without breaking the planet's limits.

Want the full data on HBM capex, regional emission profiles, and technology scaling?

Click through to access our comprehensive report: Memory at the Limits: Can the Industry and the Planet Afford the HBM Boom?