Blackwell Sets New AI Performance Bar

Author: Bryon Moyer

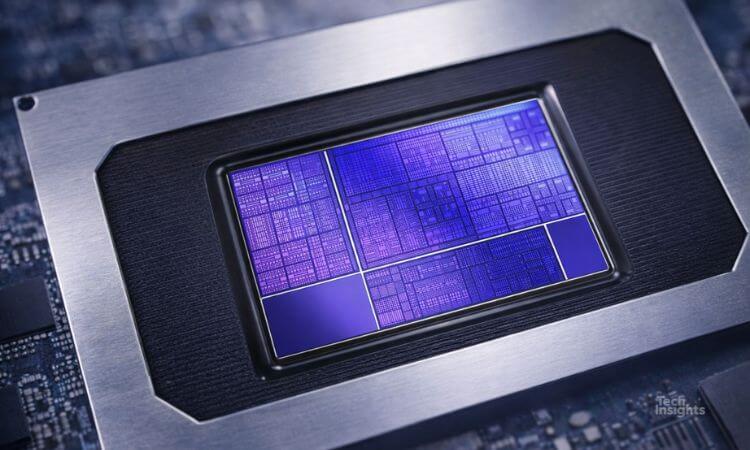

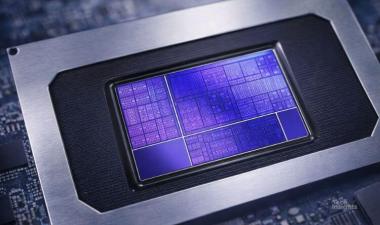

NVIDIA’s next-generation GPU, codenamed Blackwell, uses two chiplets to raise peak AI performance by 2.5× over the current Hopper generation. System performance may benefit even more from the upgrade of the company’s NVLink communications protocol to Gen 5.

In addition to the B200 GPU chip, which we focus on here, the company announced two servers (DGX B100 and DGX B200), a GB200 Grace-Blackwell module and server, an updated NVLink switch chip, updated switches, and a GB200 NVL72 data-center rack. It revealed little architectural detail, however.

NVIDIA builds the two reticle-sized dice on TSMC’s 4 nm process, a surprise, since the market had anticipated a 3 nm launch. The technology enabling the larger systems and pods includes not only the GPU but also an updated NVLink interconnect and a new NVLink switch die. NVLink Gen 5 doubles the bandwidth of the prior Gen 4. Extensive software and other services accompany the hardware.

Notably missing from the announcement was pricing; two Hopper-sized dice on the same process suggest roughly twice the price. Production is set to begin toward the end of the year, so most deliveries will realistically begin in 2025, later than the expected mid-2024 launch.
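A quick back-of-envelope calculation shows what the 2.5× peak figure and the two-die construction imply together. This is a sketch under stated assumptions, not NVIDIA data: Hopper is normalized to 1.0 for peak performance, die count, and price, and the "twice the price" estimate is taken literally even though no pricing was announced. The helper names are illustrative only.

```python
# Back-of-envelope ratios derived from the article's figures.
# Assumptions (illustrative, not NVIDIA data): Hopper is normalized to 1.0
# for peak AI performance, die count, and price; the "twice the price"
# estimate is taken literally because no pricing was announced.

HOPPER_PEAK = 1.0     # normalized Hopper peak AI performance
BLACKWELL_PEAK = 2.5  # 2.5x Hopper, per NVIDIA's claim
HOPPER_DICE = 1
BLACKWELL_DICE = 2    # two reticle-sized chiplets
PRICE_RATIO = 2.0     # assumed: roughly twice Hopper's price (speculative)


def per_die_uplift(peak_ratio: float, die_ratio: float) -> float:
    """Peak-performance gain per die, relative to Hopper."""
    return peak_ratio / die_ratio


def perf_per_price(peak_ratio: float, price_ratio: float) -> float:
    """Peak performance per unit of price, relative to Hopper."""
    return peak_ratio / price_ratio


peak_ratio = BLACKWELL_PEAK / HOPPER_PEAK
die_ratio = BLACKWELL_DICE / HOPPER_DICE

print(f"Per-die uplift: {per_die_uplift(peak_ratio, die_ratio):.2f}x")
print(f"Perf per price: {perf_per_price(peak_ratio, PRICE_RATIO):.2f}x")
```

Under these assumptions, each die delivers about 1.25× Hopper's peak, and peak performance per dollar improves by a similar 1.25×. Because these are peak figures, delivered system performance could differ, and any change to the assumed price ratio changes the second result directly.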

The Blackwell codename is a tip of the hat to American mathematician and statistician David Harold Blackwell. He made his mark in probability theory, game theory, and information theory, and he holds several firsts as an African American, including induction into the National Academy of Sciences.