- Insights

Strategic Technology Intelligence

Leverage competitive technical and market intelligence to drive innovation and make informed, strategic product roadmap decisions

Key Industry Topics

Examine the fundamental topics influencing semiconductor innovation and strategic market decisions.

Empowering Semiconductor Innovation with Insight, Tools, and Industry Connections

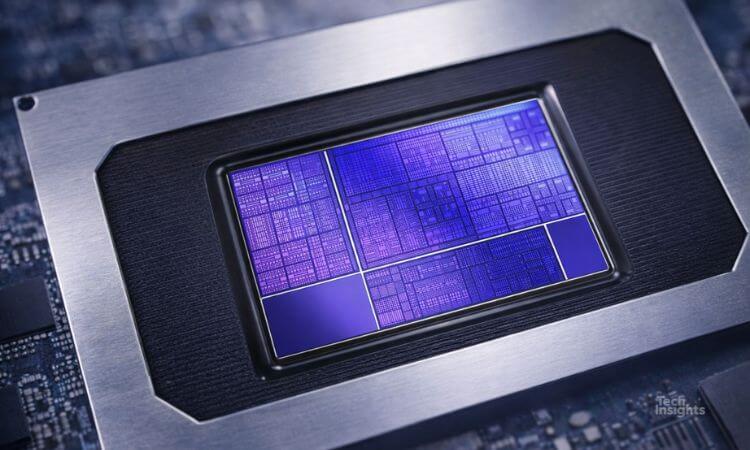

TechInsights empowers semiconductor and electronics leaders with deep technical analysis, tools, exclusive events, and industry connections—built to support R&D, product strategy, and competitive insight.

TechInsights Capabilities

Review how TechInsights supports the semiconductor industry with technical-to-market analysis that helps companies drive innovation, target markets, and manage cost and risk.

Our Experts

Meet the TechInsights experts who support our customers. Their detailed technical and market analysis, backed by decades of experience, drives innovation for the semiconductor industry.

- Products

Capital Equipment Semiconductor Products

Power your roadmap with predictive insights from TechInsights and uncover what leading semiconductor players are doing next to help you stay ahead of the curve.

Key Features:

Capital Equipment R&D

Capital Equipment Sub-Systems and Components

Capital Equipment and Manufacturing Markets

Capital Equipment and Manufacturing Strategies

Capital Equipment IR Strategies

Capital Equipment Sales Strategies

Capital Equipment Sustainability

Fabless Semiconductor Products

TechInsights delivers critical insights that empower fabless semiconductor companies to optimize chip design, outpace competitors, and lead with innovation.

Foundry Semiconductor Products

Maximize foundry performance with TechInsights’ deep analysis and strategic intelligence—empowering sharper decisions and a competitive edge at every step of production.

Integrated Device Manufacturer Products

TechInsights equips Integrated Device Manufacturers with unmatched semiconductor intelligence and strategic analysis to accelerate innovation, outperform rivals, and deliver market-leading products.

Product Manufacturer Products

Elevate your performance as a product manufacturer with cutting-edge insights and strategic analysis.

Government Products

TechInsights provides unparalleled analysis of semiconductor technologies, supply chains, and intellectual property to support national security, regulatory compliance, and policy development.

Automotive Products

TechInsights equips you with the insights you need to successfully navigate the automotive semiconductor market.

Mobile Carrier Products

TechInsights’ Mobile Carriers product delivers in-depth analysis and data-driven insights into the strategies of global mobile network operators.

- Resources

Don’t just react to the market—lead it.

TechInsights’ 2026 Outlook Summit

TechInsights’ 2026 Outlook Summit brings together leading semiconductor experts to decode the year ahead.

- Company

- Careers