History of the Microprocessor, Part 3

November 16, 2021 - Author: Linley Gwennap

As we celebrate the 50th anniversary of the Intel 4004’s production release in November 1971, the history of the microprocessor has entered its sixth decade. Covering this much history in a single article is impossible, but fortunately, Microprocessor Report has been around for most of this period. On the occasion of the microprocessor’s 25th anniversary, we summarized the first 25 years of development. We updated this coverage for the 40th anniversary and now tackle the past decade.

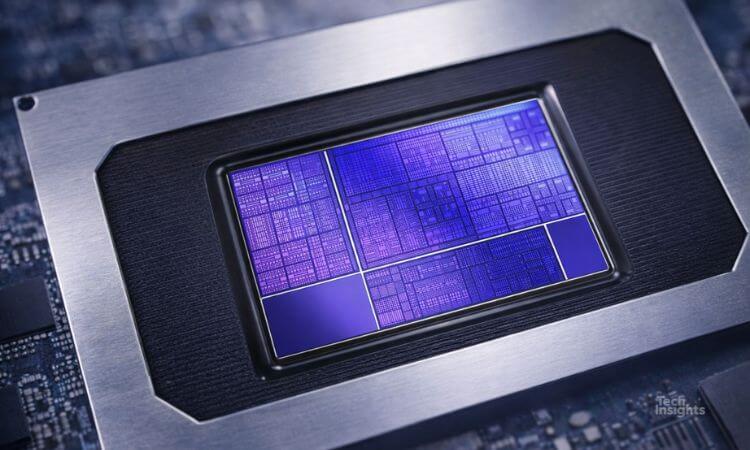

Over the past decade, CPU microarchitecture has seen only evolutionary change. The big advances have occurred in specialized accelerators for workloads such as graphics, networking, and particularly neural networks (AI). This trend has reinvigorated the nearly moribund market for processor startups. For CPUs, the biggest new trend is RISC-V, which combines an architectural devolution with an open-source business model. Together, these trends have spurred tremendous growth in processor sales and created new applications. Although x86 and Arm CPUs continue to dominate, the past decade has seen the rise of Nvidia.

An underlying trend during this time has been the stagnation of Moore’s Law. By 2012, transistor-cost improvements began to fall short of Gordon Moore’s predicted rate, and more recently, the cost has barely budged because of soaring wafer prices. A few years later, transistor-density gains decelerated as well, with the most recent nodes providing little benefit. The lack of manufacturing progress has forced processor designers to seek alternative ways to boost performance.

Subscribers can view the full article in Microprocessor Report.