Nvidia Grace Supports AI Acceleration

To tackle the largest AI models, Nvidia has designed a processor to feed its powerful new Hopper GPU. Grace has twice the memory bandwidth of any x86 processor and can hold GPT-3 in DRAM.

Linley Gwennap

Sometimes 80 billion parameters just isn’t enough. To tackle the largest AI models, Nvidia has designed a processor whose primary task is to feed data to its powerful new Hopper GPU. Hopper comes with 80GB of fast memory, enough to hold thousands of copies of ResNet-50, for example. But the biggest commercial models for language processing and recommendations simply won’t fit. Programmers must divide these enormous models among several GPUs, causing bottlenecks and other inefficiencies.
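The "thousands of copies" figure depends on numeric precision. Here is a back-of-the-envelope sketch. It assumes about 25.6 million weights for ResNet-50, decimal gigabytes for Hopper's 80GB, and weights only (no activations or workspace). The names `copies_that_fit`, `HOPPER_MEM_BYTES`, and `RESNET50_PARAMS` are illustrative, not from any Nvidia tool.

```python
# Back-of-the-envelope sketch: how many ResNet-50 weight copies fit in 80GB.
# Assumptions (not from the article): decimal GB, ~25.6M weights, weights only.
GB = 10**9
HOPPER_MEM_BYTES = 80 * GB
RESNET50_PARAMS = 25_600_000


def copies_that_fit(capacity_bytes: int, num_params: int, bytes_per_param: int) -> int:
    """Number of complete weight copies that fit; ignores activations and workspace."""
    return capacity_bytes // (num_params * bytes_per_param)


for name, nbytes in [("FP32", 4), ("FP16", 2), ("INT8", 1)]:
    print(f"{name}: {copies_that_fit(HOPPER_MEM_BYTES, RESNET50_PARAMS, nbytes)} copies")
```

Under these assumptions the script prints 781 copies at FP32, 1562 at FP16, and 3125 at INT8. "Thousands" therefore holds at the reduced precisions commonly used for inference.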

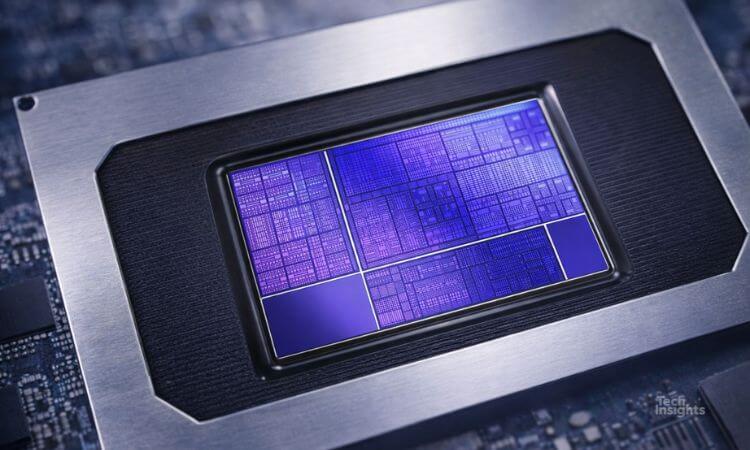

The new Grace processor aims to solve this problem. It can store up to 500 billion parameters in its capacious DRAM, enough to hold GPT-3 (175 billion parameters), one of the largest models in use. Its 32-channel memory system delivers twice the bandwidth of any x86 server processor. The chip connects directly to the GPU through high-bandwidth NVLink, forming what Nvidia calls the Grace Hopper superchip (named for Grace Hopper, the computer pioneer and Navy admiral). Because the link is memory coherent, programmers can easily share data between the two chips.
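This sketch compares the two capacities in the units the article uses, counting parameters rather than bytes. Treating Hopper's 80GB as 80 billion parameters is an assumption: it implies 1 byte (8 bits) per parameter and counts weights only. The function and constant names are hypothetical.

```python
# Sketch comparing where GPT-3's weights fit, with capacities in parameters.
# Assumption: Hopper's 80GB holds ~80B parameters at 1 byte each; weights only.
HOPPER_CAPACITY_PARAMS = 80_000_000_000
GRACE_CAPACITY_PARAMS = 500_000_000_000  # per the article
GPT3_PARAMS = 175_000_000_000


def fits(model_params: int, capacity_params: int) -> bool:
    """True if the model's weights fit entirely in one chip's memory."""
    return model_params <= capacity_params


def chips_needed(model_params: int, capacity_params: int) -> int:
    """Minimum number of equal-capacity chips to hold the weights (integer ceiling)."""
    return -(-model_params // capacity_params)


print("Hopper chips needed:", chips_needed(GPT3_PARAMS, HOPPER_CAPACITY_PARAMS))
print("Grace chips needed:", chips_needed(GPT3_PARAMS, GRACE_CAPACITY_PARAMS))
```

Under these assumptions GPT-3 must be split across three Hopper GPUs but fits within a single Grace. That split is the partitioning the article describes as a source of bottlenecks.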

Built in TSMC's 4nm process, the processor also contains 72 Arm CPUs. They manage the data transfers and also run an OS and handle other host-processor functions. Along with the Grace Hopper module, the company is developing a module that holds two Grace chips, which could serve as a server host processor. Nvidia expects to deliver production processors in 2Q23.

Grace is Nvidia’s first data-center CPU, complementing the data-center GPUs it has been shipping for years as well as data-center networking chips from the 2020 Mellanox acquisition. Nvidia also offers embedded processors such as Xavier and Orin for automotive and other applications.

The company previously worked with IBM to integrate NVLink interfaces into the Power8 and Power9 chips. By designing its own processor, Nvidia can ensure it meets the needs of AI customers while minimizing cost and power.

Subscribers can view the full article in the TechInsights Platform.