Huge accelerator capacity headlines do not compute

Last year, the public cloud delivered a colossal 3.9 trillion virtual CPU-hours of computing power, equivalent to a single processor hyperthread operating around the clock for 445 million years. Cloud providers used a minimum of 7.5 million processors to deliver this capability, with a market value of $39 billion, according to TechInsights’ Cloud and Datacenter coverage area.

But despite the rampant excitement about artificial intelligence (AI), cloud providers used a comparatively small 878,000 accelerators to deliver 7 million GPU-hours of computing power valued at $5.8 billion.
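The processor figures in the opening paragraph can be checked with a few lines of arithmetic. This minimal sketch assumes a 365-day year (8,760 hours) and treats each vCPU-hour as one hyperthread running for one hour. The per-processor figure is a derived average, not a TechInsights statistic.

```python
# Sanity check of the processor figures, assuming a 365-day (8,760-hour) year.
HOURS_PER_YEAR = 24 * 365

vcpu_hours = 3.9e12   # virtual CPU-hours delivered last year (from the text)
processors = 7.5e6    # minimum number of processors used (from the text)

# One hyperthread running nonstop delivers HOURS_PER_YEAR vCPU-hours per year,
# so this is how long a single hyperthread would need to deliver the total.
single_thread_years = vcpu_hours / HOURS_PER_YEAR

# Average number of vCPUs each processor would keep busy around the clock.
busy_vcpus_per_processor = vcpu_hours / processors / HOURS_PER_YEAR

print(f"{single_thread_years / 1e6:.0f} million years")             # 445 million years
print(f"{busy_vcpus_per_processor:.1f} busy vCPUs per processor")   # 59.4 busy vCPUs per processor
```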

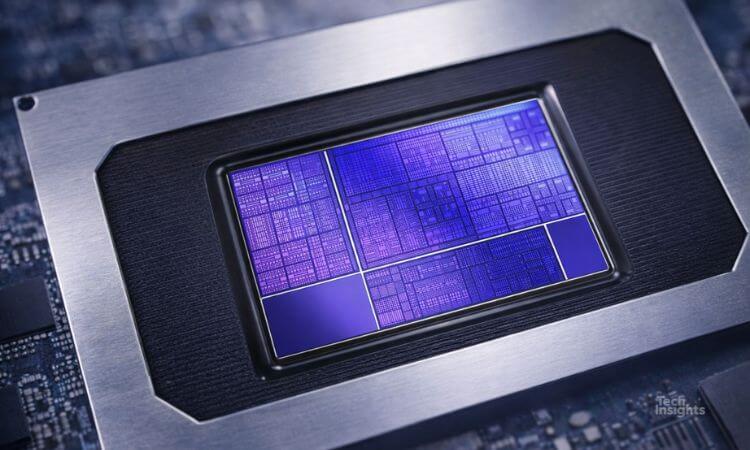

Unsurprisingly, market leader Amazon Web Services (AWS) consumes the most processors and accelerators. Intel accounts for the lion's share of processors, followed by AMD and captive chips such as AWS's Graviton.

GPU giant NVIDIA dominates accelerator volume, with captive accelerators such as Google Cloud's TPU showing signs of adoption. Microsoft Azure stands out in having no custom processor or accelerator available today.

Two questions stand out from this data:

- Why are these figures so low compared to headlines about public cloud providers deploying huge capacities, particularly of GPUs?

- Why is accelerator use so low relative to processor use?

Plan for the peak

The cloud paradigm delivers value through two key attributes: letting customers scale their applications spontaneously without advance notice and letting customers access the latest technology without colossal capital expense.

Accelerators help cloud providers meet the second attribute. They are an innovative, heavily hyped technology, and buying them outright would be a significant expense for many enterprises. The public cloud democratizes access to these in-demand and expensive resources.

Because most cloud resources are consumed on demand, providers must provision far more capacity than they expect to sell. Overprovisioning lets customers scale their applications spontaneously, confident that capacity will be available. This consumption flexibility is the public cloud's primary value proposition, and cloud providers would suffer if they failed to deliver it.

A processor or accelerator shortage due to capacity constraints could leave many existing customers, who have built their applications (and, in some cases, their entire IT strategies) around specific services, unable to access those services at critical moments. In the short term, some customers may feel frustrated; in the long term, some may abandon the cloud provider altogether over worries about reliability and the resulting impact on their revenue. So, cloud providers must plan for the peak; they can’t risk reputational damage because of capacity constraints.

Cloud providers have reported enormous figures for deployed peak accelerator capacity, although there have been reports of GPU capacity shortages. Such shortages are likely transient, occurring when many customers attempt to use capacity at precisely the same time. Cloud providers have recently launched new services to help smooth out bursty usage and better balance accelerator capacity: AWS allows advance reservations through Capacity Blocks, and Google Cloud offers Dynamic Workload Scheduler.
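To make "smoothing out bursty usage" concrete, here is a toy sketch in which deferrable jobs reserve the least-loaded window instead of all starting at once. It is a generic greedy heuristic under hypothetical numbers, not how AWS Capacity Blocks or Google Cloud's Dynamic Workload Scheduler actually work; the function names and job shapes are illustrative only.

```python
# Hypothetical illustration of smoothing bursty GPU demand with advance reservations.
# Generic greedy heuristic, NOT the implementation of any cloud provider's service.

def naive_load(base_load, jobs):
    """Every job starts at its earliest allowed hour (no reservations)."""
    load = list(base_load)
    for gpus, duration, earliest, _latest in jobs:
        for hour in range(earliest, earliest + duration):
            load[hour] += gpus
    return load

def reserved_load(base_load, jobs):
    """Each job reserves the start hour in its window where current load is lowest.

    jobs: list of (gpus, duration_hours, earliest_start, latest_start);
    latest_start + duration_hours must not exceed len(base_load).
    """
    load = list(base_load)
    starts = []
    for gpus, duration, earliest, latest in jobs:
        best = min(range(earliest, latest + 1),
                   key=lambda s: max(load[s:s + duration]))
        for hour in range(best, best + duration):
            load[hour] += gpus
        starts.append(best)
    return load, starts

base = [50, 60, 90, 100, 90, 60, 40, 30]   # GPUs in use per hour (hypothetical)
jobs = [(40, 2, 2, 6), (30, 2, 2, 6)]      # two deferrable training jobs (hypothetical)

print(max(naive_load(base, jobs)))          # 170
smoothed, starts = reserved_load(base, jobs)
print(max(smoothed), starts)                # 110 [6, 5]
```

In this toy case, shifting the jobs into quieter hours cuts the peak from 170 to 110 GPUs, which is the capacity a provider would otherwise have to hold in reserve.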

The utilization of processor and accelerator assets is far from 100% over any sustained period, and the amount of resources actually used changes hour by hour. Cloud providers plan a significant buffer to absorb these changes in demand. They may have considerable GPU capacity ready and waiting, but demand for it will vary.
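A minimal sketch of why planning for the peak depresses average utilization, assuming a hypothetical day of hourly GPU demand and an assumed 20% buffer above the observed peak. Real providers size capacity across many workloads and regions; this only illustrates the gap between provisioned and consumed capacity.

```python
import math

# Hypothetical hourly GPU demand over one day (illustrative numbers only).
hourly_demand = [300, 280, 260, 250, 260, 320, 450, 600, 750, 820, 860, 900,
                 880, 850, 820, 780, 700, 620, 540, 480, 420, 380, 340, 310]

BUFFER_PERCENT = 20  # assumed headroom above the observed peak

def provisioned_capacity(demand, buffer_percent):
    """Capacity sized for the peak hour plus a safety buffer, in whole GPUs."""
    return math.ceil(max(demand) * (100 + buffer_percent) / 100)

def average_utilization(demand, capacity):
    """Share of provisioned capacity actually consumed, averaged over all hours."""
    return sum(demand) / (len(demand) * capacity)

capacity = provisioned_capacity(hourly_demand, BUFFER_PERCENT)
utilization = average_utilization(hourly_demand, capacity)
print(capacity, f"{utilization:.0%}")   # 1080 51%
```

Even though demand reaches about 83% of capacity at the busiest hour, the fleet averages only about half, and only the consumed half generates revenue.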

The figures described thus far represent the minimum number of accelerators used: those being sold and generating meaningful revenue. They don’t represent actual capacity, which explains the gap between these figures and the cloud providers’ assertions.

TechInsights obtained and analyzed data on cloud provider revenue, usage (from third-party cloud management platforms), price, and availability to calculate the number of active processors and accelerators used. But how do we know our numbers are reasonable? Perhaps cloud providers are selling accelerators in vast quantities?
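TechInsights' actual methodology combines revenue, usage, price, and availability data and is not reproduced here. The sketch below shows only the core idea behind a *minimum* count, under the simplifying assumption of a single average price per unit-hour: divide revenue by price to get billed hours, then divide by the hours in a year. The function name and inputs are hypothetical.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a 365-day year

def minimum_active_units(revenue, price_per_unit_hour):
    """Fewest whole units that could deliver the billed hours.

    A unit can be billed for at most HOURS_PER_YEAR hours in a year, so any
    unit that sits idle part of the time means more units are in service.
    """
    billed_hours = revenue / price_per_unit_hour
    return math.ceil(billed_hours / HOURS_PER_YEAR)

# Hypothetical inputs: $1 billion of accelerator revenue at $2.00 per accelerator-hour.
print(minimum_active_units(1_000_000_000, 2.00))  # 57078
```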

Ultimately, it's simple math: if cloud providers were selling the full capacity of GPUs they reportedly have, their revenue would far, far exceed what they are reporting.

For example, AWS has publicly stated that its NVIDIA H100 GPUs are hosted in EC2 UltraClusters of 20,000 GPUs. These are now accessible in three regions through one instance type, which provides access to 8 GPUs. Each UltraCluster can, therefore, support 2,500 instances. We don't know how many clusters are in each region, so let's be conservative and assume one cluster per region.

If these UltraClusters were used around the clock all year at the on-demand rate of $98 per hour, they would generate $6.5 billion in revenue: 8% of AWS's total 2023 revenue and 16% of its revenue likely attributable to virtual machines. It is implausible that a single instance type, launched just three months ago and available in just three regions, would contribute so significantly to AWS's revenue.
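The cluster example can be reproduced directly from the inputs given in the text (20,000 GPUs per cluster, 8 GPUs per instance, one cluster in each of three regions, $98 per instance-hour). This sketch also backs out the revenue totals implied by the stated 8% and 16% shares; those totals are derived, not reported figures.

```python
# Back-of-the-envelope check of the H100 cluster example, using the text's inputs.
HOURS_PER_YEAR = 24 * 365    # 8,760 hours

GPUS_PER_CLUSTER = 20_000    # GPUs per cluster (from the text)
GPUS_PER_INSTANCE = 8        # the one instance type exposes 8 GPUs
CLUSTERS = 3                 # conservative: one cluster in each of three regions
HOURLY_PRICE = 98            # on-demand price per instance-hour, rounded as in the text

instances_per_cluster = GPUS_PER_CLUSTER // GPUS_PER_INSTANCE
full_use_revenue = CLUSTERS * instances_per_cluster * HOURLY_PRICE * HOURS_PER_YEAR

# Totals implied by the text's "$6.5 billion = 8% of revenue = 16% of VM revenue".
implied_total_revenue = 6.5e9 / 0.08
implied_vm_revenue = 6.5e9 / 0.16

print(instances_per_cluster)                       # 2500
print(f"${full_use_revenue / 1e9:.2f} billion")    # $6.44 billion
print(f"${implied_total_revenue / 1e9:.0f}B total, ${implied_vm_revenue / 1e9:.0f}B from VMs")
```

At exactly $98 the full-use figure is about $6.44 billion, so the $6.5 billion in the text suggests an unrounded hourly rate slightly above $98. The implied totals (about $81 billion overall and $41 billion from virtual machines) put VMs at roughly half of revenue, in line with the estimate later in the article.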

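In fact, if each type of accelerator AWS offers today were deployed in a cluster of 20,000 in every region where it is currently available, and sold around the clock, those clusters would generate 50% of AWS’s revenue for 2023. Because virtual machines account for only about half of cloud provider revenue, accelerator instances would then have to supply essentially all of it, leaving nothing for the processor-based instances that make up most cloud estates. This is clearly impossible. Accelerators must be only partially utilized, or AWS’s revenue numbers don’t make sense.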
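The contradiction can be written as a simple share calculation. This sketch uses the two shares from the text (roughly half of revenue from virtual machines, 50% from accelerators at full use) and assumes revenue scales linearly with hours sold at a constant price. The 5% "actual" accelerator share is purely hypothetical, chosen only to show how a revenue share translates into a utilization level.

```python
# Bound on accelerator utilization implied by revenue shares (fractions of total revenue).
VM_SHARE = 0.50         # roughly half of revenue comes from virtual machines (from the text)
FULL_USE_SHARE = 0.50   # every AWS accelerator cluster sold around the clock (from the text)

# Revenue share left for all processor-only virtual machines if accelerators ran flat out.
cpu_vm_share_left = VM_SHARE - FULL_USE_SHARE
print(cpu_vm_share_left)   # 0.0

def implied_utilization(actual_share, full_use_share):
    """Fraction of accelerator capacity in use, given the revenue share it actually earns."""
    return actual_share / full_use_share

# Hypothetical: accelerator instances actually earn 5% of total revenue.
print(f"{implied_utilization(0.05, FULL_USE_SHARE):.0%}")   # 10%
```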

Of course, many GPUs will be used internally to power machine learning and AI platforms. These won't generate revenue as part of a virtual machine sale, but they must still generate revenue from somewhere for the hardware investment to be worthwhile. A public cloud is far more than just infrastructure. AWS, for instance, has over 200 services and millions of product variations for sale. An AI application that uses only two of these (processors and accelerators) misses out on all the other technologies that public cloud providers offer.

A rising tide raises all boats

Undoubtedly, accelerators (GPUs, ASICs, FPGAs, and beyond) are of great interest to the industry. But it’s easy to forget that accelerators on their own are relatively useless. To be worthwhile, they need processors, storage services, code development platforms, and database and analytics services. A cloud application isn’t just a bunch of servers and GPUs: it uses a wide array of services from the cloud provider, along with application programming interfaces (APIs) and code to communicate with the provider and integrate those services. Cloud applications are complex; they're not just virtual machines sitting on boxes.

So, although accelerator use will grow, it won’t be at the expense of other services. All supporting IT services will need to grow, too, and cloud provider revenue growth will come from accelerators and many other services alike.

Considering that, it's no surprise that processors make up the bulk of cloud providers' active estates. Processors don't just power virtual machines (although our data suggests virtual machines account for half of cloud providers' revenues); they also deliver other capabilities such as storage, databases, analytics, and beyond. Processors are used in every single hyperscale cloud service; accelerators are not.

Furthermore, most enterprise applications aren't using AI in all corners of their IT estates yet.

Applications need many different cloud services to differentiate and innovate, and that threatens pure-play GPU cloud providers.

Conclusion

Today, accelerator numbers are relatively small. The TechInsights Datacenter and Cloud coverage area will deliver its forecast in the first quarter of 2024, and accelerator use will clearly increase over time. So, too, will everything else available in the cloud.

The rising tide of cloud adoption will raise all boats: infrastructure, platform, and everything else, as well as AI. Cloud providers will continue to procure more capacity to deliver their crucial differentiation: spontaneous scalability. But this capacity is unlikely to be consumed around the clock. Cloud providers aren't stockpiling capacity; they are provisioning excess so that it is there when demand spikes.